One more day, one more piece of ChIP-Seq software to cover. I've not talked much about FindPeaks, which is the software descended from Robertson et al., for obvious reasons. The paper was just an application note - and well, I'm really familiar with how it works, so I'm not going to review it. I have talked about QuEST, however, which was presumably descended from Johnson et al. And those of you who have been following ChIP-Seq papers since the early days will realize that there's still something missing: the peak finder descended from Barski et al., which is the subject of today's blog: SISSR. Those were the first three published ChIP-Seq papers, and so it's no surprise that each of them followed up with a paper (or application note!) on their software.

So, today, I'll take a look at SISSR, to complete the series.

From the start, the Barski paper was discussing both histone modifications and transcription factors. Thus, the context of the peak finder is a little different. Where FindPeaks (and possibly QuEST as well) was originally conceived for identifying single peaks, and later expanded to handle multiple peaks, I would imagine that SISSR was conceived with the idea of working on "complex" areas of overlapping peaks. That's only relevant in terms of their analysis, though, and I'll come back to that.

The most striking thing you'll notice about this paper is that the datasets look familiar. They are, in fact, the sets from Robertson, Barski and Johnson: STAT1, CTCF and NRSF, respectively. This is the first of the ChIP-Seq application papers that actually performs a comparison between the available peak finders, and of course, claims that theirs is the best. Again, I'll come back to that.

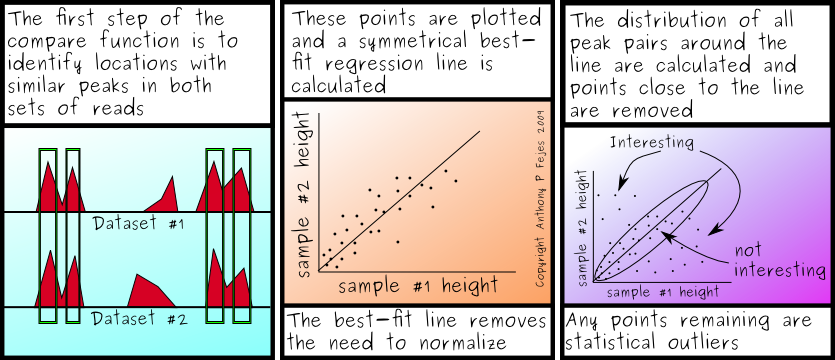

The method used by SISSR is almost identical to the method used by FindPeaks, with the use of directional information built into the base algorithm, whereas FindPeaks provides it as an optional module (-directional flag, which uses a slightly different method). They provide an excellent visual image on the 4th page of the article, demonstrating their concept, which will explain the method better than I can, but I'll try anyhow.

In ChIP-Seq, a binding site is expected to have many real tags pointing at it, as tags upstream should be on the sense strand, and tags downstream should be on the anti-sense strand. Thus, a real binding site should exist at transition points, where the majority of tags switch from the sense to the anti-sense strand. By identifying these transition points, they will be able to identify locations of real binding sites. More or less, that describes the algorithm employed, with the following modifications: a window is used (20bp default) instead of doing it on a base-by-base basis, and parameter estimation is employed to guess the length of the fragments.
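To make the transition-point idea concrete, here's a minimal sketch in Python. It is not SISSR's actual code: the function name `find_transitions`, the use of 5' tag coordinates, and the choice to report the midpoint between the two flanking windows are all my own assumptions, and the tag-count thresholds and significance tests from the paper are left out entirely.

```python
from collections import Counter


def find_transitions(sense_starts, antisense_starts, window=20):
    """Return candidate binding-site coordinates (hypothetical helper).

    A candidate is reported wherever the net tag count per window
    (sense minus anti-sense) flips from positive to negative.
    """
    net = Counter()
    for pos in sense_starts:
        net[pos // window] += 1      # sense tags push the window positive
    for pos in antisense_starts:
        net[pos // window] -= 1      # anti-sense tags push it negative

    sites = []
    prev_idx, prev_val = None, 0
    for idx in sorted(net):
        val = net[idx]
        if val == 0:
            continue                 # balanced windows carry no direction
        if prev_val > 0 and val < 0:
            # Assumption: place the site halfway between the end of the
            # last sense-dominated window and the start of the first
            # anti-sense-dominated window.
            left = (prev_idx + 1) * window
            right = idx * window
            sites.append((left + right) // 2)
        prev_idx, prev_val = idx, val
    return sites


sites = find_transitions([100, 105, 110, 118], [160, 165, 170])
```

Note that the answer can only ever be as precise as the window allows, which is exactly the resolution trade-off I grumble about next.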

In my review of QuEST, I complained that windows are a bad idea(tm) for ChIP-Seq, only to be corrected that QuEST wasn't using a window. This time, the window is explicitly described - and again, I'm puzzled. FindPeaks uses an identical operation without windows, and it runs blazingly fast. Why throw away resolution when you don't need to?

On the subject of length estimation, I'm again less than impressed. I realize this is probably an early attempt at it - and FindPeaks has gone through its fair share of bad length estimators, so it's not a major criticism, but it is a weakness. To quote a couple lines from the paper: "For every tag i in the sense strand, the nearest tag k in the anti-sense strand is identified. Let J be the tag in the sense strand immediately upstream of k." Then follows a formula based upon the distances between (i,j) and (j,k). I completely fail to understand how this provides an accurate assessment of the real fragment length. I'm sure I'm missing something. As a function that describes the width of peaks, that may be a good method, which is really what the experiment is aiming for, anyhow - so it's possible that this may just be poorly named.
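For what it's worth, the tag lookup in that quote is easy enough to sketch, even if the formula that follows it isn't. The snippet below only finds the (i, j, k) triples; it does not attempt the paper's length formula, which I'm not going to reconstruct from memory. The name `tag_triples` is made up, and I'm assuming that "nearest" means the nearest anti-sense tag at or downstream of i, and that "immediately upstream of k" means the last sense tag at or before k. Both readings are guesses on my part.

```python
from bisect import bisect_left, bisect_right


def tag_triples(sense, antisense):
    """Return (i, j, k) coordinate triples (hypothetical helper).

    i: a sense-strand tag
    k: the nearest anti-sense tag at or downstream of i (assumed reading)
    j: the last sense-strand tag at or upstream of k (assumed reading)
    """
    sense = sorted(sense)
    antisense = sorted(antisense)
    triples = []
    for i in sense:
        k_idx = bisect_left(antisense, i)    # first anti-sense tag >= i
        if k_idx == len(antisense):
            continue                         # no anti-sense tag downstream
        k = antisense[k_idx]
        j = sense[bisect_right(sense, k) - 1]  # last sense tag <= k
        triples.append((i, j, k))
    return triples


triples = tag_triples([100, 110, 130], [150, 300])
```

Looking at the triples this produces, the (j,k) distance is clearly a property of how tightly the tags bracket a site, which is why I suspect this behaves more like a peak-width measure than a fragment-length measure.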

In fairness, they also provide options for a manual length estimation (or XSET, as it was referred to at the time), which overrides the length estimation. I didn't see a comparison in the paper about which one provided the better answers, but having lots of options is always a good thing.

Moving along, my real complaint about this article is the analysis of their results compared to past results, which comes in two parts. (I told you I'd come back to it.)

The first complaint is what they were comparing against. The article was submitted for publication in May 2008, but they compared results to those published in the June 2007 Robertson article for STAT1. By August, our count of peaks had changed. By January 2008, several upgraded versions of FindPeaks were available, and many bugs had been ironed out. It's hardly fair to compare the June 2007 FindPeaks results to the May 2008 version of SISSR, and then declare SISSR the clear winner. Still, that's not a great problem - albeit somewhat misleading.

More vexing is their quality metric. In the motif analysis, they clearly state that because of the large amount of computing power required, only the top X% of reads were used in their analysis. For comparison with FindPeaks, the top 5% of peaks were used - and they were able to observe the same motifs. Meanwhile, their claim to find 74% more peaks than FindPeaks is not really discussed in terms of the quality of the additional sites. (FindPeaks was also modified to identify sub-peaks after the original data set was published, so this is really comparing apples to oranges, a fact glossed over in the discussion.)

Anyhow, complaints aside, it's good to see a paper finally compare the various peak finders out there. They provide some excellent graphics, and a nice overview of how their ChIP-Seq application works, while contrasting it to the published data available. Again, I enjoyed the motif work, particularly that of figure 5, which correlates four motif variants to tag density - which I feel is a fantastic bit of information, buried deeper in the paper than it should be.

So, in summary, this paper presents a rather unfair competition by using metrics guaranteed to make SISSR stand out, but still provides a good read with background on ChIP-Seq, excellent illustrations and the occasional moment of deep insight.

Labels: Algorithms, article review, Chip-Seq