In discussing my work, I'm often asked how hard it is to write a peak calling algorithm. The answer usually surprises people: It's trivial. Peak calling itself isn't hard. However, there are plenty of pitfalls that can surprise the unwary. (I've found myself in a few holes along the way, which have been somewhat challenging to get out of.)

The pitfalls, when they do show up, can be very painful - masking the triviality of the situation.

In reality, the three most frustrating things that occur in peak calling:

- Maintaining the software

- Peak calling without unlimited resources eg, 64Gb RAM

- Keeping on the cutting edge

On the whole, each of these things is a separate software design issue worthy of a couple of seconds of discussion.

When it comes to building software, it's really easy to fire up a "one-off" script. Anyone can write something that can be tossed aside when they're done with it - but code re-use and recycling is a skill. (And an important one.) Writing your peak finder to be modular is a lot of work, and a huge amount of time investment is required to keep the modules in good shape as the code grows. A good example of why this is important can be illustrated with file formats. Since the first version of FindPeaks, we've transitioned through two versions of Eland output, Maq's .map format and now on to SAM and BAM (but not excluding BED, GFF, and several other more or less obscure formats). In each case, we've been able to simply write a new iterator and plug it into the existing modular infrastructure. In fact, SAM support was added in quite rapidly by Tim with only a few hours of investment. That wouldn't have been possible without the massive upfront investment in good modularity.

The second pitfall is memory consumption - and this is somewhat more technical. When dealing with sequencing reads, you're faced with a couple of choices: you either sort the reads and then move along the reads one at a time, determining where they land - OR - you can pre-load all the reads, then move along the chromosome. The first model takes very little memory, but requires a significant amount of pre-processing, which I'll come back to in a moment. The second requires much less cpu time - but is intensely memory thirsty.

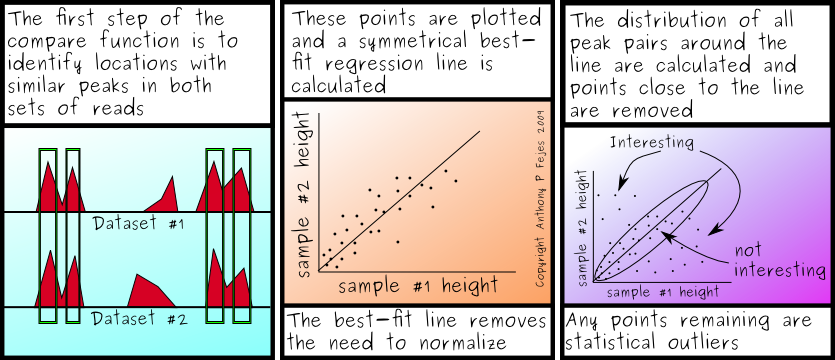

If you want to visualize this, the first method is to organize all of your reads by position, then to walk down the length of the chromosome with a moving window, only caring about the reads that fall into the window at any given point in time. This is how FindPeaks works now. The second is to build a model of the chromosome, much like a "pileup" file, which then can be processed however you like. (This is how I do SNP calling.) In theory, it shouldn't matter which one you do, as long as all your reads can be sorted correctly. The first can usually be run with a limited amount of memory, depending on the memory strucutures you use, whereas the second pretty much is determined by the size of the chromosomes you're using (multiplied by a constant that also depends on the structures you use.)

Unfortunately, using the first method isn't always as easy as you might expect. For instance, when doing alignments with transcriptomes (or indels), you often have gapped reads. An early solution to this in FindPeaks was to break each portion of the read into separate aligned reads, and process them individually - which works well when correctly sorted. Unfortunately, new formats no longer allow that - using a "pre-sorted" bam/sam file, you can now find multi-part reads, but there's no real option of pre-fragmenting those reads and re-sorting. Thus, FindPeaks now has an additional layer that must read ahead and buffer sam reads in order to make sure that the next one returned is the correct order. (You can get odd bugs, otherwise, and yes, there are many other potential solutions.)

Moving along to the last pitfall, the one thing that people want out of a peak finder is that it is able to do the latest and greatest methods - and do it ahead of everyone else. That on it's own is a near impossible task. To keep a peak finder relevant, you not only need to implement what everyone else is doing, but also do things that they're not. For a group of 30 people, that's probably not too hard, but for academic peak callers, that can be a challenge - particularly since every use wants something subtly different than the next.

So, when people ask how hard it is to write their own peak caller, that's the answer I give: It's trivial - but a lot of hard work. It's rewarding, educational and cool, but it's a lot of work.

Ok, so is everyone ready to write their own peak caller now? (-;

Labels: Algorithms, application development, Bioinformatics, Chip-Seq, Code planning, FindPeaks, programming, Vancouver Short Read Analysis Package